Introducing sudofox/blockdevdiff

I made something new today!

This tool is meant to help with resuming an interrupted disk clone (e.g. dd). Because it only reads small samples at regular intervals instead of the whole disk, it's extremely efficient for volumes of any size. (I wrote it for a 24 TB volume after something crashed.)

Usage

```
Usage: ./blockdevdiff.sh </dev/source_device> </dev/target_device> <starting offset> <jump size> <sample size> [email address to notify]
```

```
[INFO] ===== Block Device Differ =====
[INFO] sudofox/blockdevdiff
[INFO] This tool is read-only and makes no modifications.
[INFO] When the rough point of difference is found, reduce the jump size,
[INFO] raise the starting offset, and retest until you have an accurate
[INFO] offset (measured in bytes).
[INFO] Recommended sample size: 1024 (bytes)
[INFO] Starting time: Fri Nov 30 21:34:10 EST 2018
[INFO] Source device: testfile1.bin
[INFO] Target device: testfile2.bin
[INFO] Starting at offset: 0
[INFO] Jump size: 100
[INFO] Sample size: 10
[INFO] testfile1.bin is not a block device
[INFO] testfile2.bin is not a block device
[INFO] Starting...
[PROGRESS] Offset 25000 | Source 6fbb8d...| Target 858a9a...
[INFO] Sample differed at position 25000, sample size 10 bytes
======== FOUND DIFFERENCE ========
Ending time: Fri Nov 30 21:34:12 EST 2018
Found difference at offset 25000
SOURCE_SAMPLE_HASH = 6fbb8d9e8669ba6ea174b5011c97fe80
TARGET_SAMPLE_HASH = 858a9a2907c7586ef27951799e55d0e8
```
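blockdevdiff itself is a shell script; the Python below is only a sketch of the sampling idea behind it, assuming it compares MD5 hashes (the 32-hex-digit hashes in the log are consistent with MD5) of `sample_size` bytes read every `jump` bytes. The function names are hypothetical.

```python
import hashlib
import os


def sample_hash(f, offset, sample_size):
    """MD5 hex digest of `sample_size` bytes read at `offset`."""
    f.seek(offset)
    return hashlib.md5(f.read(sample_size)).hexdigest()


def find_difference(source, target, start, jump, sample_size):
    """Return the first sampled offset whose samples differ, or None.

    Only offsets start, start + jump, start + 2*jump, ... are checked,
    so a difference that falls between two samples can be missed.
    """
    with open(source, "rb") as src, open(target, "rb") as dst:
        # Seeking to the end returns the size; this works for regular
        # files and for block devices alike.
        size = min(src.seek(0, os.SEEK_END), dst.seek(0, os.SEEK_END))
        for offset in range(start, size, jump):
            if sample_hash(src, offset, sample_size) != sample_hash(dst, offset, sample_size):
                return offset
    return None
```

Because only offsets `start`, `start + jump`, ... are read, scanning a 24 TB volume with a 50 GB jump takes fewer than 500 small reads, which is why the jump size matters far more than the volume size.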

Translating to a dd

I am not responsible if you destroy your data doing this.

Let's say we started with the following command:

```
dd if=/dev/source_device_here of=/dev/target_device_here bs=128k status=progress
```

Somewhere between 16 and 19 terabytes into the process, your server crashes. Perhaps your RAID card overheated. Now what?

Well, we can use our handy blockdevdiff tool to find out roughly where the data starts to differ. Scale your starting offset and jump size to the size of your volume; all blockdevdiff arguments are in bytes.

Start big, with a jump size of ~50 GB or so. When you start getting different data, set the starting offset to the offset it reported minus the jump size, reduce the jump size, and run it again.
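That narrowing procedure is just a loop. In this Python sketch, `scan(start, jump)` is a hypothetical stand-in for one blockdevdiff run that returns the first differing offset (or None); each round steps back one jump from the hit and divides the jump size by `factor`. It assumes that everything after the crash point differs, which is the usual situation for an interrupted copy.

```python
from typing import Callable, Optional

# scan(start, jump) -> first sampled offset that differed, or None.
# Hypothetical: in practice this would be one run of blockdevdiff.sh.
Scan = Callable[[int, int], Optional[int]]


def refine(scan: Scan, start: int, jump: int, min_jump: int, factor: int = 10) -> Optional[int]:
    """Repeat the scan with shrinking jumps until the jump is <= min_jump."""
    found = None
    while True:
        hit = scan(start, jump)
        if hit is None:
            return found              # nothing (more) differs from `start` onward
        found = hit
        if jump <= min_jump:
            return found              # precise enough
        start = max(hit - jump, 0)    # the last matching sample was one jump back
        jump = max(jump // factor, min_jump)
```

The result is only accurate to within one sample, which is another reason to round it down generously before resuming.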

```
[INFO] ===== Block Device Differ =====
[INFO] sudofox/blockdevdiff
[INFO] This tool is read-only and makes no modifications.
[INFO] When the rough point of difference is found, reduce the jump size,
[INFO] raise the starting offset, and retest until you have an accurate
[INFO] offset (measured in bytes).
[INFO] Recommended sample size: 1024 (bytes)
[INFO] Starting time: Fri Nov 30 21:43:08 EST 2018
[INFO] Source device: /dev/sda
[INFO] Target device: /dev/sdb
[INFO] Starting at offset: 17003360000000
[INFO] Jump size: 1000000000
[INFO] Sample size: 100
[INFO] Starting...
[PROGRESS] Offset 17074360000000 | Source 684146...| Target 6d0bb0...
[INFO] Sample differed at position 17074360000000, sample size 100
======== FOUND DIFFERENCE ========
Ending time: Fri Nov 30 21:43:28 EST 2018
Found difference at offset 17074360000000
SOURCE_SAMPLE_HASH = 68414605a320573a0f9ad1c8e71ab013
TARGET_SAMPLE_HASH = 6d0bb00954ceb7fbee436bb55a8397a9
```

Keep going until you get close enough to a starting point which is reasonable for your volume's size.

Once you have your number, round it down generously. I rounded mine down a few hundred gigabytes just to be sure: it's better to start too early than too late.
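The rounding is simple arithmetic. Here is a sketch, assuming you also want the result aligned to the 128 KiB block size used in the dd command (dd's `128K` means 131072 bytes). With byte-based skip/seek, dd accepts any offset, so the alignment is optional, just tidy. `resume_offset` is a hypothetical helper.

```python
def resume_offset(found: int, margin: int, block_size: int = 128 * 1024) -> int:
    """Step back `margin` bytes from `found`, then round down to a block boundary."""
    start = max(found - margin, 0)
    return start - start % block_size
```

With the offset from the example run above and a 300 GB margin, `resume_offset(17_074_360_000_000, 300 * 10**9)` gives 16774359941120, which is exactly 127978210 blocks of 128 KiB.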

Here is your new command (DO NOT COPY AND PASTE)

```
dd if=/dev/source_device_here of=/dev/target_device_here bs=128K conv=notrunc seek=XXXXXXXXX skip=XXXXXXXXXXX iflag=skip_bytes oflag=seek_bytes status=progress
```

if: input file (e.g. a device file like /dev/sda)

of: output file

conv=notrunc tells dd not to truncate the output file. It apparently doesn't make any real difference for actual block devices, but it doesn't hurt, so just leave it in.

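If you are using this on VM images stored as files on another filesystem, then you DEFINITELY want it: without it, dd truncates a regular output file at the seek= offset before it starts writing.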

Pass iflag=skip_bytes and oflag=seek_bytes so that skip and seek are measured in bytes instead of blocks, matching the byte offsets that blockdevdiff reports. This makes things much less confusing.

seek: dictates the position on the target device (of=) at which dd starts writing

skip: dictates the position on the source device (if=) at which dd starts reading

seek and skip should be the same!

status=progress: so you can actually see what dd is doing
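Since mixing up skip and seek (or typing two different numbers) is the easiest way to corrupt the target, here is a Python sketch that assembles the command from a single byte offset so both values are always identical. It only builds and prints the command for you to review; it never runs dd. `resume_dd_command` is a hypothetical helper, and the offset in the usage line is just an example value.

```python
import shlex


def resume_dd_command(source: str, target: str, offset: int, bs: str = "128K") -> list[str]:
    """Build a dd invocation that resumes a clone at `offset` bytes."""
    if offset < 0:
        raise ValueError("offset must be non-negative")
    return [
        "dd",
        f"if={source}",
        f"of={target}",
        f"bs={bs}",
        "conv=notrunc",
        f"seek={offset}",      # where to start writing on the target (of=)
        f"skip={offset}",      # where to start reading on the source (if=)
        "iflag=skip_bytes",    # interpret skip= as bytes (GNU dd)
        "oflag=seek_bytes",    # interpret seek= as bytes (GNU dd)
        "status=progress",
    ]


if __name__ == "__main__":
    cmd = resume_dd_command("/dev/source_device_here", "/dev/target_device_here",
                            16_774_359_941_120)
    print(shlex.join(cmd))  # review by hand before running anything
```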

"Email when done" functionality

This requires an installed mail server (e.g. Exim or Postfix) so that the "mail" binary works. In cases where you need a very specific offset on a really big volume, you can pass one final argument containing an email address.

You will be emailed when blockdevdiff has finished.
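The notification step amounts to handing a message to the `mail` binary. Here is a Python sketch of the same idea (blockdevdiff does this in shell). The subject line and function names are hypothetical, and `runner` exists only so the command can be inspected without actually sending mail.

```python
import subprocess


def mail_command(address: str, subject: str) -> list[str]:
    # `mail -s SUBJECT ADDRESS` reads the message body from stdin.
    return ["mail", "-s", subject, address]


def notify(address: str, offset, runner=subprocess.run) -> None:
    """Email the result of a finished scan to `address`."""
    if offset is None:
        body = "blockdevdiff finished. No difference found.\n"
    else:
        body = f"blockdevdiff finished. Found difference at offset {offset}\n"
    runner(mail_command(address, "blockdevdiff finished"), input=body, text=True, check=True)
```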

I got the last 8TB drives that I needed for the Flipnote Hatena archive!

Happy Thanksgiving! I had a lovely time with my family.

I don't really do Black Friday shopping, but I did make one stop: I now have the final three 8 TB drives that I need to build a second, actually redundant RAID array, which I'll copy the Flipnotes to from the RAID-0 array! This is every Flipnote from Flipnote Hatena and Flipnote Gallery: World. The three drives (and a $5 St. Jude donation) cost me $640.97. With parts, bandwidth, and hardware, the archive project is running me almost $2000 so far.

I got my Japanese DSi with UGOMEMO-V1

This has the very first public version of Flipnote Studio on it.

Tomorrow marks the start of the Sudomemo Daily Draw!

Sunday will be the first Sudomemo Daily Draw. This daily event, open to anyone from Hatena or Sudomemo, is meant to be a fun way to encourage artists to try new ideas and to see other people's interpretations of the Daily Topic!

I'm looking forward to seeing this grow :)

If you're reading this, you are absolutely welcome to join in!

Hatena Haiku Anti-Spam

I've developed a service called Hatena Haiku Anti-Spam. I've been testing it extensively over the past six months or so, and it's now at the point of extremely high accuracy.

History

Hatena has a spam issue. Not a small one, either. It affects most of the services that they provide, especially Haiku, Bookmark, Blog, Anond, Question, and a few more. Fotolife is affected too, although with a different kind of spam, which I am still investigating.

There have been some attempts at stopping it, but they have come in the form of small mitigations, e.g. the Humanity Quiz, which is used as a barrier to initial entry to some services like Haiku, with old accounts grandfathered in. I've found that the quiz can be inconsistent in its behavior, and it sometimes does not verify a user who has submitted three or more correct answers.

The spam posted to Haiku and other services is extremely consistent in nature. I presently work at a managed web hosting company and have had some experience with Apache SpamAssassin, a filtering engine that combines Bayesian classification with regex-based rules. I decided to retool SpamAssassin to filter Hatena Haiku posts, so I could use the service with less interruption!

From Emails to Haikus

One of the main issues is that SpamAssassin is designed specifically for email filtering; as such, it expects input formatted like an email message.

For example (original post):

```
Delivered-To: site@h.hatena.ne.jp
Received: by sudofox.spam.filter.lightni.ng (Sudofix)
        id 81807923680507219; Fri, 08 Jun 2018 09:12:22 -0400 (EDT)
From: austinburk@h.hatena.ne.jp (Sudofox)
To: site@h.hatena.ne.jp
Subject: 食べた
Content-Type: text/plain; charset=UTF-8
Message-Id: <20180908091222.81807923680507219@sudofox.spam.filter.lightni.ng>
X-Hatena-Fan-Count: 212
Date: Fri, 08 Jun 2018 09:12:22 -0400 (EDT)

食べた=That looks AMAZING QoQ
```

This has the bare minimum of headers that spamd (the SpamAssassin daemon) needs to parse it, plus one extra: X-Hatena-Fan-Count.
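For illustration, here is a Python sketch of wrapping a post in headers like the ones above. The function name and parameters are hypothetical, and it omits the Received and Message-Id headers for brevity. The resulting text could then be piped to spamd through the `spamc` client.

```python
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime


def haiku_post_to_message(hatena_id: str, keyword: str, text: str,
                          fan_count: int, posted: datetime) -> str:
    """Wrap a Haiku post in minimal email-style headers for spamd."""
    headers = [
        "Delivered-To: site@h.hatena.ne.jp",
        f"From: {hatena_id}@h.hatena.ne.jp",
        "To: site@h.hatena.ne.jp",
        f"Subject: {keyword}",
        "Content-Type: text/plain; charset=UTF-8",
        f"X-Hatena-Fan-Count: {fan_count}",
        f"Date: {format_datetime(posted)}",
    ]
    # A blank line separates the headers from the body, as in any email.
    return "\n".join(headers) + "\n\n" + f"{keyword}={text}\n"


if __name__ == "__main__":
    edt = timezone(timedelta(hours=-4))
    print(haiku_post_to_message("austinburk", "食べた", "That looks AMAZING QoQ",
                                212, datetime(2018, 6, 8, 9, 12, 22, tzinfo=edt)))
```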

SpamAssassin user_prefs and Custom Rules

Here is my user_prefs file. If you start reading after "Begin Sudofox config", you will see some of the rule changes I made to make it more suitable for filtering Haiku posts, including disabling all email-related checks (e.g. SPF, RBLs, DKIM). There are also some changes to better suit Haiku's primary user base; these are above the "Begin Sudofox config" line, but are noted.

You'll also see a number of rules that I developed for SEO spam that is specific to Haiku - usually related to "view sports/television without paying" spam.

Spam users rarely have any fans at all, which is a metric we can use for filtering. That's what X-Hatena-Fan-Count is for:

```
header SUDO_HATENA_ZERO_FANS X-Hatena-Fan-Count =~ /^0$/
score SUDO_HATENA_ZERO_FANS 1.0
describe SUDO_HATENA_ZERO_FANS User has no fans

header SUDO_HATENA_FEW_FANS X-Hatena-Fan-Count =~ /^([1-9]{1})$/
score SUDO_HATENA_FEW_FANS -1.0
describe SUDO_HATENA_FEW_FANS User has 1-9 fans - spam less likely

header SUDO_HATENA_10_PLUS_FANS X-Hatena-Fan-Count =~ /^([0-9]{2})$/
score SUDO_HATENA_10_PLUS_FANS -2.0
describe SUDO_HATENA_10_PLUS_FANS User has 10-99 fans - spam unlikely

header SUDO_HATENA_100_PLUS_FANS X-Hatena-Fan-Count =~ /^([0-9]{3,})$/
score SUDO_HATENA_100_PLUS_FANS -10.0
describe SUDO_HATENA_100_PLUS_FANS User has 100+ fans - prob legitimate
```

I suppose if spammers read this blog post, they could adapt, but the spam content itself usually scores much higher than the -2 points they could gain by adding each other as fans.
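To double-check that exactly one of the four fan-count rules fires for any plain decimal fan count, here is a small Python sketch that applies the same regexes and scores outside SpamAssassin. It only mirrors the config above for testing; SpamAssassin does the real scoring.

```python
import re

# The four fan-count rules above, as rule name -> (regex, score).
FAN_RULES = {
    "SUDO_HATENA_ZERO_FANS": (re.compile(r"^0$"), 1.0),
    "SUDO_HATENA_FEW_FANS": (re.compile(r"^([1-9]{1})$"), -1.0),
    "SUDO_HATENA_10_PLUS_FANS": (re.compile(r"^([0-9]{2})$"), -2.0),
    "SUDO_HATENA_100_PLUS_FANS": (re.compile(r"^([0-9]{3,})$"), -10.0),
}


def fan_count_score(header_value: str) -> float:
    """Sum the scores of every fan-count rule whose regex matches the header."""
    return sum(score for pattern, score in FAN_RULES.values()
               if pattern.search(header_value))
```

For example, `fan_count_score("212")` is -10.0, matching the 100+ rule for the sample post's X-Hatena-Fan-Count.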

The source code is here. As it started as an experiment, it is a bit messy; however, it has grown into a service that Hatena users actually use (via the API and UserScript), so I plan to rewrite the entire thing soon.

This repository contains only the spam-classification code and spamd-related things.

Website

Hatena Haiku Anti-Spam has two sections which you can browse: the main summary page and the user information page.

The main page has two pie charts: the one on the left shows recent spam users, and the one on the right shows recent legitimate posts. Both cover the past twenty-four hours, and you can click on any slice of the pie to be taken to the corresponding user information page.

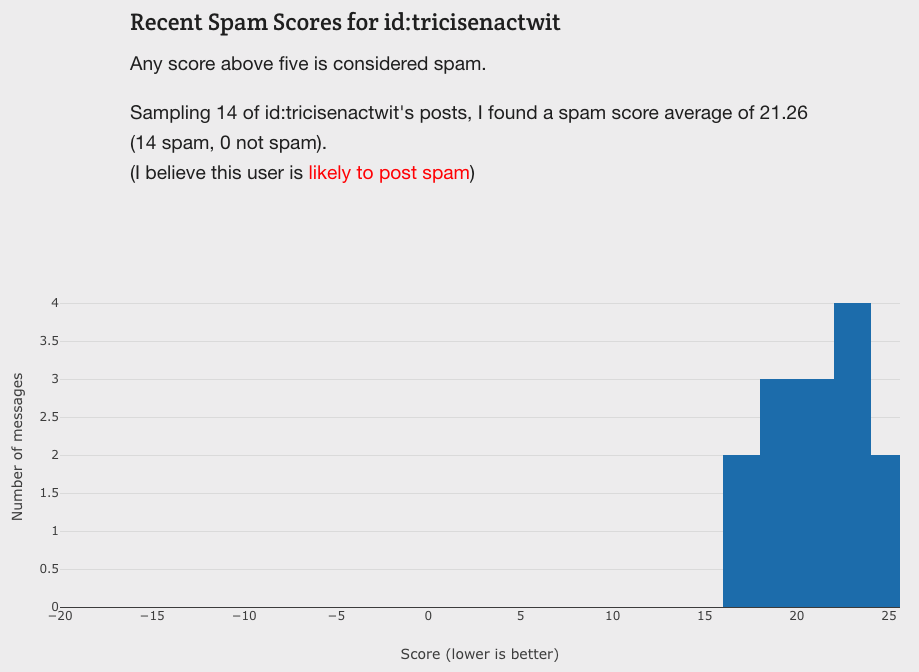

The user information page has a graph of overall scores for the user, shown below:

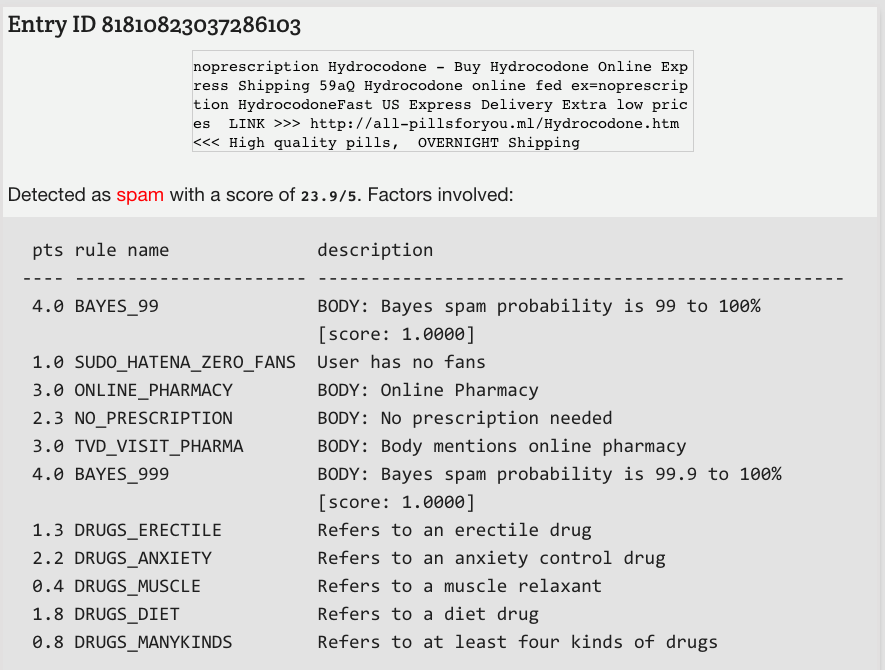

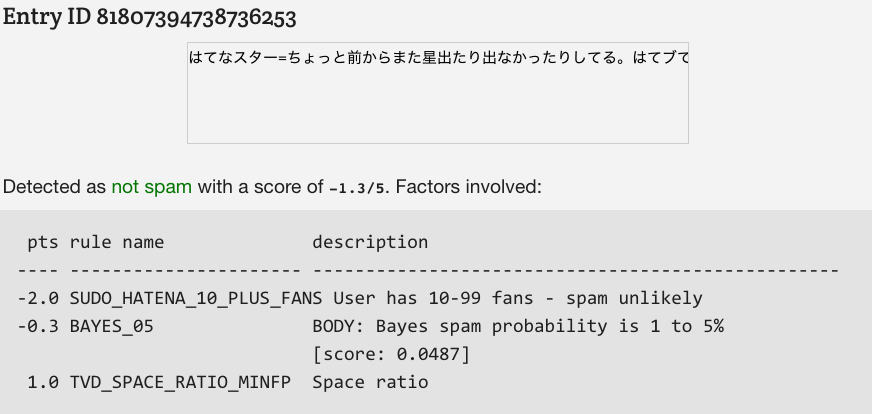

It also has a sample of ten posts and how they were classified. To avoid republishing the actual spam content, I take a sample of the text, store it as a base64-encoded snippet, and render it onto an HTML canvas so that you can view it.
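The storage side of that could look like the Python sketch below: truncate the text, then base64-encode its UTF-8 bytes. The 80-character limit and the function names are hypothetical, and the canvas drawing itself happens in the browser and isn't shown.

```python
import base64


def make_snippet(text: str, max_chars: int = 80) -> str:
    """Keep the first `max_chars` characters and base64-encode them as UTF-8."""
    # Truncating by characters (not bytes) never splits a multibyte character.
    return base64.b64encode(text[:max_chars].encode("utf-8")).decode("ascii")


def read_snippet(snippet: str) -> str:
    """Decode a stored snippet back into text for rendering."""
    return base64.b64decode(snippet).decode("utf-8")
```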

Here is a recent example (there's been a lot of drug-related spam recently, which is even easier to filter):

Here is an example of a legitimate message:

API

Hatena Haiku Anti-Spam has an API that you can use.

The main one in use for spam filtering is the Recent Scores API:

https://haikuantispam.lightni.ng/api/recent_scores.json

This returns the Hatena IDs that have posted recently, along with their scores. Any score above 5 should be considered spam.
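A threshold of 5 matches SpamAssassin's default `required_score` of 5.0. The response format isn't spelled out here, so this Python sketch assumes the JSON is an object mapping each Hatena ID to its numeric score; check the real response and adapt `spam_ids` if the shape differs. The function names are hypothetical.

```python
import json
from urllib.request import urlopen

RECENT_SCORES_URL = "https://haikuantispam.lightni.ng/api/recent_scores.json"
SPAM_THRESHOLD = 5.0


def fetch_recent_scores(url: str = RECENT_SCORES_URL):
    """Download and parse the Recent Scores API response."""
    with urlopen(url, timeout=30) as resp:
        return json.load(resp)


def spam_ids(scores: dict) -> list:
    """Return Hatena IDs whose score is above the spam threshold.

    ASSUMPTION: `scores` maps Hatena ID -> numeric score.
    """
    return sorted(hid for hid, score in scores.items() if float(score) > SPAM_THRESHOLD)
```

Usage would be `spam_ids(fetch_recent_scores())`, under the same assumption about the response shape.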

And this one is the Recent Keywords API:

https://haikuantispam.lightni.ng/api/recent_keywords.json

Due to the nature of keywords on Haiku, I can't as easily label a particular keyword as bad or good. The scores here are obtained by averaging the recent scores of posts made under that keyword. Still, it can be useful for updating the keyword list on the right-hand side of the page.
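Here is a minimal sketch of that averaging, assuming the input is a list of (keyword, score) pairs for recent posts. The real service's storage and time window aren't described here, and `keyword_scores` is a hypothetical name.

```python
from collections import defaultdict
from statistics import mean


def keyword_scores(posts):
    """Average the spam score of recent posts per keyword.

    `posts` is an iterable of (keyword, score) pairs (hypothetical input shape).
    """
    by_keyword = defaultdict(list)
    for keyword, score in posts:
        by_keyword[keyword].append(score)
    return {kw: mean(scores) for kw, scores in by_keyword.items()}
```

Averaging is what keeps a keyword from being labeled outright: a keyword with one spam post (score 10) and one normal post (score 0) lands at 5, right on the edge.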

UserScript

id:noromanba has developed an excellent UserScript that makes use of the Recent Scores API and filters spam when browsing on Hatena Haiku. I recommend installing it!

(You can use it with Tampermonkey, Violentmonkey, or Greasemonkey. I personally use Tampermonkey, which is compatible with all modern browsers.)

The script is linked at the top of the page:

Here is a direct link:

Rich Content Tags for Slack

This is a limited-use feature, but I noticed that whenever I reported a user to Hatena with a link to Haiku Anti-Spam, the page got a visit from Slackbot fetching a link preview (Hatena uses Slack). So I added tags that give a summary of the user as classified by Haiku Anti-Spam:
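As a sketch, assuming Open Graph meta tags (one of the formats Slack reads when unfurling a link), the summary could be rendered like this. The function name, the title wording, and the exact tag set are illustrative, not necessarily what the site uses.

```python
from html import escape


def unfurl_meta_tags(hatena_id: str, summary: str, url: str) -> str:
    """Render Open Graph <meta> tags for a user information page.

    ASSUMPTION: Open Graph tags; contents here are illustrative.
    """
    tags = {
        "og:title": f"id:{hatena_id} - Hatena Haiku Anti-Spam",
        "og:description": summary,
        "og:url": url,
    }
    # Escape values so quotes or angle brackets in a summary can't break the HTML.
    return "\n".join(
        f'<meta property="{prop}" content="{escape(value, quote=True)}">'
        for prop, value in tags.items()
    )
```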

Going Forward

I've been really busy managing my many different projects, but I will certainly continue to maintain and improve Haiku Anti-Spam. What I'm really hoping for is to work with Hatena to develop an accurate and scalable spam detection system for their services, or at the very least to provide inspiration and an example of how it can be done.

Some of the things I'll be updating with Hatena Haiku Anti-Spam soon are:

- How I store posts used for classification

- Database: Right now I'm using SQLite, as it is portable, but it does not always scale well over time. I can already see Haiku Anti-Spam lagging from time to time (the API caches results and is updated independently of the user's request, and as such isn't subject to the lag). I plan to move the application to MySQL.

- Haiku Anti-Spam backend and site merge: Right now, the only code on GitHub is that which powers the backend, but not the site frontend. Once I complete the move to MySQL, eliminating the direct file-access/paths involved in the service, I plan to put the website portion on GitHub as well.
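The API caching mentioned in the Database item (results are updated independently of the user's request) can be sketched as a job that regenerates the JSON file on a schedule, e.g. from cron. The `scores` table and its columns are a hypothetical schema, and the same approach would work against MySQL with a different driver.

```python
import json
import os
import sqlite3
import tempfile


def write_recent_scores_cache(db: sqlite3.Connection, out_path: str, limit: int = 500) -> None:
    """Regenerate the cached JSON from the database, outside any web request.

    ASSUMPTION: a `scores(hatena_id, score, posted_at)` table.
    """
    rows = db.execute(
        "SELECT hatena_id, score FROM scores ORDER BY posted_at DESC LIMIT ?", (limit,)
    ).fetchall()
    latest = {}
    for hatena_id, score in rows:
        latest.setdefault(hatena_id, score)  # rows are newest-first; keep the newest
    # Write to a temp file in the same directory, then atomically swap it in,
    # so API readers never see a half-written file.
    fd, tmp = tempfile.mkstemp(dir=os.path.dirname(os.path.abspath(out_path)))
    with os.fdopen(fd, "w") as f:
        json.dump(latest, f)
    os.replace(tmp, out_path)
```

Serving a prebuilt file means a slow database query only delays the next refresh, never an API response.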

Can you help?

I'm still learning Japanese, but it is a difficult process. I would appreciate it if someone who is fluent in both languages would translate this article into Japanese, so that I can publish it in both languages.

Please bookmark this blog post!

I love Hatena, and I treasure the people that I've met here. Hatena builds services that promote the community, and that's always been my focus. I hope this contribution will serve that purpose!

Working on my Hatena Profile

It's taken a while to do, but I think it's turning out pretty well so far!

Feel free to bookmark this post :>

Is this my new home..?

I'm moving into a new place (with less expensive rent) and it's already proving nicer than my old place. It has a dishwasher, too! I took over the lease from an ex-colleague who moved out to start his own business; the lease lasts through May of 2019.

I'm still working on moving, so there are a lot of boxes...

Technically, this is my new home, though it doesn't feel like it yet. The strange thing is, neither did my old apartment.

I have a sense of wanting to go someplace new, someplace beautiful. I am thankful for my current job, but I feel ready to move on to something other than being a customer-facing system administrator (aka support technician).

I want to build and design new applications, and develop my skills. I want to do security research, and a lot of other things. I want to support the growth of artists and animators, as well. I can do most anything when I put my heart into it.

But right now I don't feel like where I am is the place I need to be. That, I suppose, is why I don't feel at home yet.

The important thing is that I treat my living space as my home. It needs to be a place I can look forward to going to at the end of the day; a place of rest and refuge.

At the end of the day, wherever God wants me to be, I'll do my best to honor Him by serving my employer to my absolute utmost ability, and do so with humility.